When you hear open source software, what do you think of? Software developers hunched over keyboards, fueled by sugar and caffeine, or a recent request by your IT department to try new “free” software? It’s both of those things, and much, much more.

Behind open source is a strong culture that champions putting patterns and models forward for open debate. Open source may have started as a description for software source code and a development model, but it has moved far beyond that. It is a challenge to approach the world in an innovative way, looking for solutions that break from tradition, and doing so in a collaborative environment where transparency of process is the most important virtue.

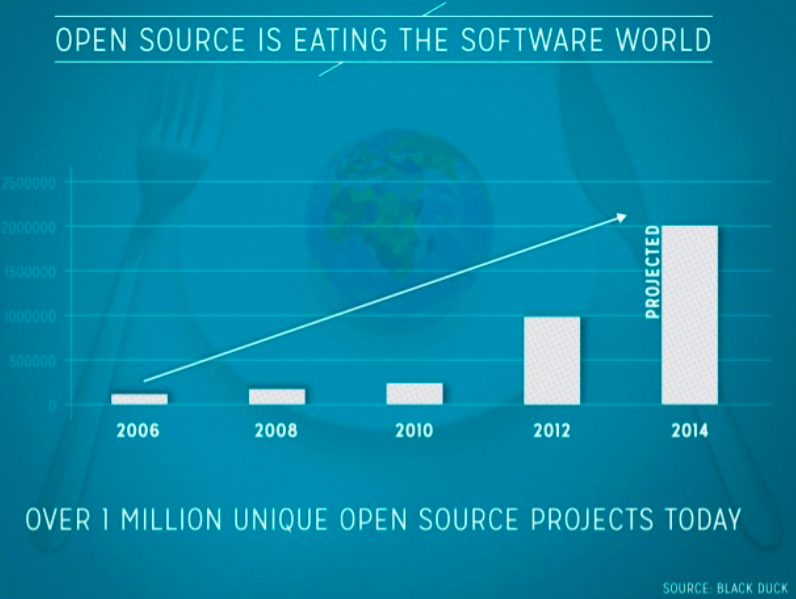

You may not be certain what open source is, but you use it, and it is everywhere. Successful modern enterprises have come to rely on open source software (OSS). In the realm of infrastructure, open source provides benefits on the axes of cost, quality, speed, and risk mitigation through the support of a wide community of contributors. In addition, these organizations have found they can avoid getting too far ahead of the pack by quickly opening up their innovations to collaboration with others in the market. The list of names is long, with proponents in the ranks of hardcore tech firms like Red Hat and Cloudera, but also popular businesses such as Facebook, LinkedIn, and Netflix.

Netflix has become known not just for streaming movies, but also for providing its suite of in-house developed cloud management tools as free open source components. Organizations as large as IBM have heralded Netflix for this move, as it has accelerated their ability to innovate. Netflix’s cloud development solutions, such as Chaos Monkey for cloud testing and Asgard for deployment, are forcing cloud leaders like Amazon to push the boundaries of their offerings, lest they lose ground to newer competitors.

Without open source, LinkedIn would not have achieved its success and become a social networking platform used by nearly every professional. By using open source for big data analytics, LinkedIn has been able to dominate its space. This is not done blindly, as the company states open source helps it in “reaching out to the best, brightest, and most interested individuals to explore what’s new and help us further build our components.” This is despite the fact that LinkedIn disclosed, in a 2011 registration filing, risks associated with OSS, specifically related to potential exposure to open source licensing claims. These disclosures show that while serious risks can come with improper management of OSS licenses, businesses may accept them as a tradeoff for the rewards of being early to market.

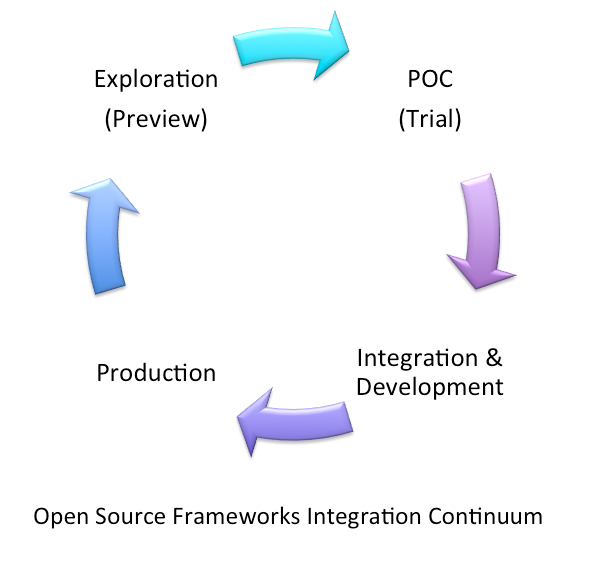

Recently ThoughtWorks, the company I work for, announced that its Go software will now be freely available under the Apache 2.0 open source license. This was done as a conscious display of ThoughtWorks’ desire to continue to be a recognized innovator alongside other cutting-edge, global solution providers. We understand that open source simply creates better software, fostering an environment of collaboration where the best technology is developed and everyone wins. It is through the open source model that higher-quality, more secure, more easily integrated software is created, at a greatly accelerated pace and often at a lower cost.

ThoughtWorks embraced open source development in its early days, turning to agile methodology pioneers Ward Cunningham and Martin Fowler (current ThoughtWorks chief scientist) in 1999 to help guide a stalled project. During that endeavor, many innovations that would later make their way into the popular Framework for Integrated Test (FIT) were developed. More notable, however, was the development of a piece of software based upon the extreme programming practice of continuous integration. CruiseControl from ThoughtWorks, which debuted within the following year, was the first continuous integration server on the market, and it led the way for nearly a decade as engineering teams began to embrace the practice as a standard.

Fundamentally, the reason open source has flourished and produced incredible innovations in technology is the culture it brings to the table. With OSS, organizations can continuously improve and deliver quality software. Today’s hypercompetitive business environment requires rapid innovation and a steady flow of the most important work between all roles. A free-flowing, collaborative, and creative environment makes it possible to get ahead of the competition and turn the software release process into a business advantage.

So, what is open source? It is the ability to revolutionize processes and industries, and create positive change inside and out.